No Preference Left Behind: Group Distributional Preference Optimization

Dec 9, 2024·

,

,

,

,

,

,

·

0 min read

,

,

,

,

,

,

·

0 min read

Binwei Yao

Zefan Cai

Yun-Shiuan Chuang

Shanglin Yang

Ming Jiang

Diyi Yang

Junjie Hu

Demonstration of GDPO

Demonstration of GDPO

Abstract

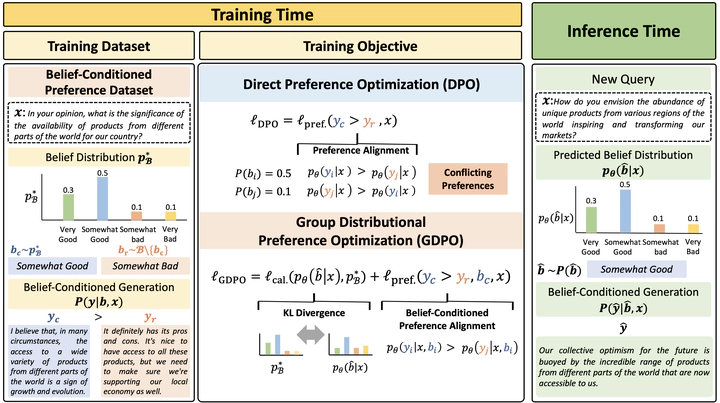

Preferences within a group of people are not uniform but follow a distribution. While existing alignment methods like Direct Preference Optimization (DPO) attempt to steer models to reflect human preferences, they struggle to capture the distributional pluralistic preferences within a group. These methods often skew toward dominant preferences, overlooking the diversity of opinions, especially when conflicting preferences arise. To address this issue, we propose Group Distribution Preference Optimization (GDPO), a novel framework that aligns language models with the distribution of preferences within a group by incorporating the concept of beliefs that shape individual preferences. GDPO calibrates a language model using statistical estimation of the group’s belief distribution and aligns the model with belief-conditioned preferences, offering a more inclusive alignment framework than traditional methods. In experiments using both synthetic controllable opinion generation and real-world movie review datasets, we show that DPO fails to align with the targeted belief distributions, while GDPO consistently reduces this alignment gap during training. Additionally, our evaluation metrics demonstrate that GDPO outperforms existing approaches in aligning with group distributional preferences, marking a significant advance in pluralistic alignment.

Type

Publication

1